Why Big Data Isn’t Working – and What You Can Do About It

The value of any organization’s technology integration depends heavily on how well its big data feeds the digital transformation machine. To put it more simply: big data enables digital transformation. That’s the goal, anyway.

So how has big data technology been performing for businesses in the grand scheme of things? Not as well as hoped, it turns out. Bullish expectations for big data may be outpacing our ability to properly execute full-scale big data projects.

A recent study by Consultancy.uk, a British online advisory and consulting platform, shows that 70 percent of big data projects in the UK are failing. The study also found that nearly half of all UK organizations have attempted some sort of big data project or initiative. However, almost 80 percent of those companies have been unable to process their data in its entirety.

This isn’t exactly breaking news, though. Almost three years ago, leading research and advisory firm Gartner reported something similar on a global scale, predicting that 60 percent of big data projects in 2017 would fail to get past the early implementation stages. To make matters worse, that prediction turned out to be too conservative: 85 percent of big data projects fell flat that year.

So why are so many initiatives falling short of expectations, and what can be done to increase the likelihood of measurable success when looking to drive value through big data projects?

Promise of big data

There’s a reason so many organizations are still taking on big data projects, though. Look to the five Vs:

- Volume and velocity – The data explosion: exponentially more data from more sources, created at a continually accelerating speed.

- Variety – The proliferation of mobile and IoT endpoints, traditional data types, and a massive increase in the amount of unstructured data.

- Veracity – As the old saying goes, “Garbage in, garbage out.” A big data project is only as good as the data feeding it.

- Value – The white rabbit of big data. Discovering impactful insights or new value streams for the organization is the biggest challenge, because value signals potential revenue and competitive differentiation. And value is the reason to get into big data in the first place.
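To make “garbage in, garbage out” a little more concrete, here is a minimal Python sketch of record-level veracity checks that split a batch into clean and rejected records before anything reaches analytics. The `Record` fields, the reference list of country codes, and the three rules are hypothetical examples chosen for illustration, not part of any particular product or standard.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Hypothetical reference data: the country codes this example treats as valid.
VALID_COUNTRIES = {"GB", "US", "DE"}


@dataclass
class Record:
    customer_id: str
    amount: float
    country: str


def veracity_issues(rec: Record) -> List[str]:
    """Return human-readable problems with a record; an empty list means it passed."""
    issues = []
    if not rec.customer_id.strip():
        issues.append("missing customer_id")
    if rec.amount < 0:
        issues.append("negative amount")
    if rec.country not in VALID_COUNTRIES:
        issues.append(f"unknown country {rec.country!r}")
    return issues


def split_clean_and_rejected(
    records: Iterable[Record],
) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Keep records that pass every check; reject the rest along with their reasons."""
    clean, rejected = [], []
    for rec in records:
        problems = veracity_issues(rec)
        if problems:
            rejected.append((rec, problems))
        else:
            clean.append(rec)
    return clean, rejected


if __name__ == "__main__":
    batch = [Record("c1", 10.0, "GB"), Record("", 5.0, "US"), Record("c3", -2.0, "FR")]
    clean, rejected = split_clean_and_rejected(batch)
    print(len(clean), len(rejected))  # 1 2
```

Keeping the rejection reasons next to each bad record, rather than silently dropping it, makes it easier to trace data quality problems back to their source systems.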

The continued potential of analytics and the promise of deliverable outcomes have turned big data into a multibillion-dollar technology industry in less than a decade.

That has a lot to do with this bold 2011 prediction about big data from the McKinsey Global Institute: “Big data will become a key basis of competition, underpinning new waves of productivity growth, innovation, and consumer surplus—as long as the right policies and enablers are in place.”

The idea is that every company in just about every industry (retail, finance, insurance, healthcare, agriculture, etc.) is sitting on a goldmine of vast, varied, disparate, and disorganized enterprise data that is trapped within legacy systems and infrastructure and constantly being generated by the business. To get at this treasure trove of information, every company needs specialized access and analytics tools to properly connect and organize that data and, ultimately, translate it into an ingestible, analyzable form.

Assuming success, big data infrastructure is expected to:

- Connect and unify all data sources

- Generate powerful insights for the business

- Allow for predictive decision making

- Create more efficient supply chains

- Deliver meaningful ROI

- Transform every industry across the board

And while some organizations have successfully uncorked the potential of big data (mostly massive global companies and brands), most organizations are struggling to reach their desired big data end state.

Meaning of big data in 2018

But now that we’re about halfway through the year, what exactly does “big data” even mean in 2018? If we had to use one word, it would be “analytics.” The ability to tap big data for value comes down to an organization’s ability to run analytics applications over the data, often in a data lake. This assumes the challenges of volume, velocity, variety, and veracity have been solved, and how well they have been solved is a measure of data readiness.

Data readiness paves the way to predictive analytics. And data readiness is built on the quality of the big data infrastructure used to power business and data science analytics applications. For instance, any modernized IT infrastructure must support data migration tied to technology upgrades, integrated systems, and applications, and it must be able to transform data into the desired formats and reliably move and integrate that data into a data lake or enterprise data warehouse.
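As a rough illustration of transforming data into a desired format and moving it into a data lake, the Python sketch below converts a legacy CSV export into JSON Lines files partitioned by date. The column names, the DD/MM/YYYY source date format, and the `orders/order_date=YYYY-MM-DD` folder layout are all assumptions made for this example; a production data lake would typically live on object storage rather than a local directory.

```python
import csv
import io
import json
import tempfile
from datetime import datetime
from pathlib import Path


def transform_row(row: dict) -> dict:
    """Normalize one legacy row (assumed format: DD/MM/YYYY dates, amounts with thousands separators)."""
    order_date = datetime.strptime(row["order_date"], "%d/%m/%Y").date()
    return {
        "order_id": row["order_id"].strip(),
        "order_date": order_date.isoformat(),  # ISO 8601, e.g. "2018-06-05"
        "amount": float(row["amount"].replace(",", "")),
    }


def load_to_lake(csv_text: str, lake_root: Path) -> int:
    """Transform CSV rows and append them as JSON Lines, one folder per order date."""
    count = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        rec = transform_row(row)
        partition = lake_root / "orders" / f"order_date={rec['order_date']}"
        partition.mkdir(parents=True, exist_ok=True)
        # Opening the file per row keeps the sketch simple; batch writes would be faster.
        with open(partition / "part-0000.jsonl", "a", encoding="utf-8") as fh:
            fh.write(json.dumps(rec) + "\n")
        count += 1
    return count


if __name__ == "__main__":
    legacy_export = 'order_id,order_date,amount\nA1,05/06/2018,"1,250.50"\nA2,05/06/2018,99.00\n'
    with tempfile.TemporaryDirectory() as tmp:
        print(load_to_lake(legacy_export, Path(tmp)))  # 2
```

Partitioning by date is one common choice because many analytics engines can skip whole folders when a query filters on that column; other partition keys may suit other workloads better.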

Three biggest big data technology challenges

So why are so many big data infrastructures collapsing so early in the implementation lifecycle? It all comes back to the last (and very telling) part of that 2011 McKinsey quote on big data: “As long as the right policies and enablers are in place.” Here are a few reasons big data projects don’t get off the ground:

1. Lack of skill set

Despite machine learning, AI, and the growing number of applications that work without human intervention, data scientists remain the imaginative force driving big data projects and queries. They are the “enablers” McKinsey refers to, and they represent a high-demand, and therefore rare, skill set in the market. Big data technology continues to influence the hiring market, and in many cases big data developers, engineers, and data scientists learn on the job as they go. Many tech companies are placing more importance on creating data-related positions and training people for them so they can put big data principles to work. It’s projected that 2.7 million people will hold data-related jobs by 2020, with 700,000 of those positions in big data science and analytics, which points to a highly competitive and expensive hiring market.

2. Cost

The big data analytics industry is worth almost $125 billion and is only expected to grow. That translates into high costs for big data implementation projects, including set-up fees and recurring subscription payments. Even as the technology advances and the barriers to entry fall, the initial costs of big data, especially for small and mid-sized businesses, may put a project out of reach. The investment may require traditional consulting, outsourced analytics, in-house staffing, and storage and analytics software tools and applications. And the available cost models are either too expensive or offer only minimum-viable-product features that are unlikely to deliver real results. But first and foremost, a company that wants a proper big data implementation must prioritize architecture and infrastructure.

3. Data integration and data ingestion

Before big data analytics can be performed, data integration must occur: data of all sorts (legacy, operational, and real-time) needs to be sourced, moved, transformed, and provisioned to big data storage applications, using technology that guarantees security and control throughout the process. Modern integration technology that connects systems, applications, and cloud technologies helps organizations build a dependable data gateway that also overcomes data movement challenges. Companies struggling to modernize systems and deploy a strategy for integrating data from various sources should consider a B2B-led integration strategy, which delivers better value by focusing on the partner ecosystems, applications, data stores, and big data analytics platforms that ultimately drive the business. Centralizing integration and data flows through a B2B-led integration strategy allows for:

- Ingestion into data lake applications

- Multi-cloud connectivity for flexible data provisioning for analytics and for optimizing storage total cost of ownership (TCO)

- Faster, more reliable integration of data from new internal applications, the cloud, and business partners

- Complete, end-to-end management and on-demand auditing of any data transaction

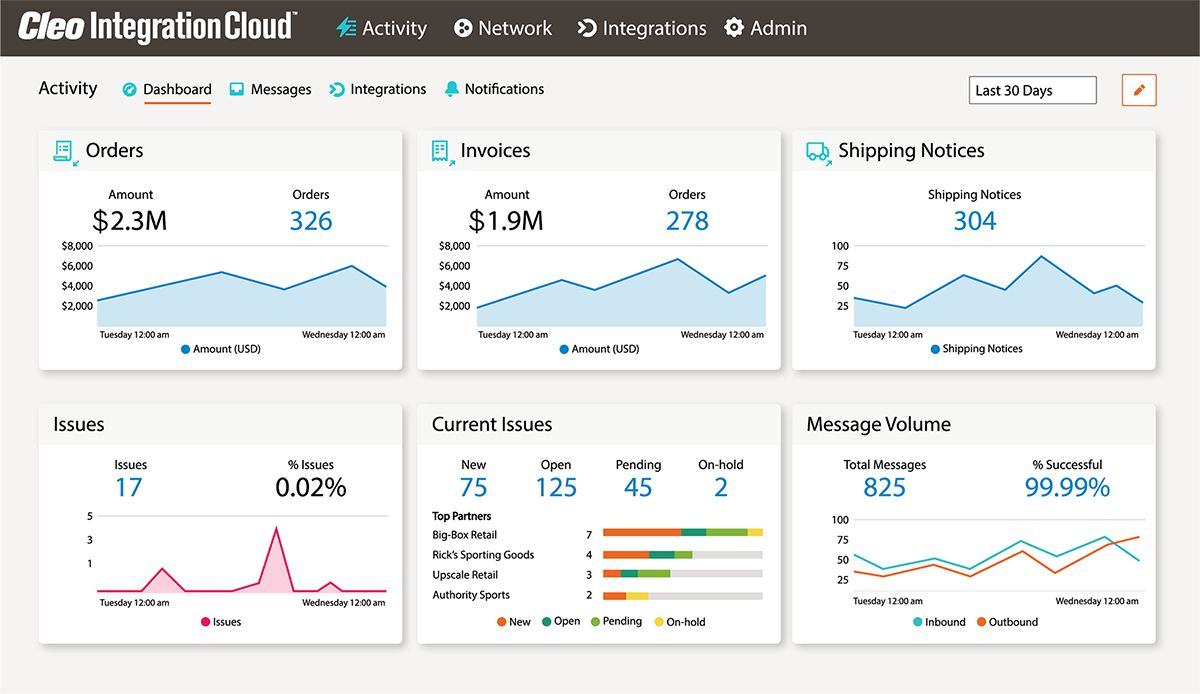

- Real-time visibility into every data transaction, with automated notifications and alerts

- 100 percent uptime on efficient, scalable operational data flows
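To make end-to-end management, on-demand auditing, and automated alerts a little less abstract, here is a minimal Python sketch of a central hub that every data transfer passes through. `IntegrationHub`, `AuditEntry`, and the delivery callbacks are hypothetical names invented for this example, not Cleo’s product or any real library’s API; the point is only that routing all flows through one place lets each transaction be recorded and lets failures trigger an alert.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AuditEntry:
    transaction_id: str
    source: str
    destination: str
    status: str  # "delivered" or "failed"
    checksum: str  # SHA-256 of the payload that was sent
    timestamp: float
    detail: str = ""


@dataclass
class IntegrationHub:
    """Hypothetical central hub: every transfer goes through move(), which audits it."""

    alert: Callable[[AuditEntry], None]
    audit_log: List[AuditEntry] = field(default_factory=list)

    def move(self, transaction_id: str, source: str, destination: str,
             payload: dict, deliver: Callable[[bytes], None]) -> bool:
        body = json.dumps(payload, sort_keys=True).encode("utf-8")
        checksum = hashlib.sha256(body).hexdigest()
        try:
            deliver(body)
            status, detail = "delivered", ""
        except Exception as exc:  # record any delivery failure instead of losing it
            status, detail = "failed", str(exc)
        entry = AuditEntry(transaction_id, source, destination, status, checksum, time.time(), detail)
        self.audit_log.append(entry)
        if status == "failed":
            self.alert(entry)  # automated notification for failed transfers
        return status == "delivered"

    def audit(self, transaction_id: str) -> List[AuditEntry]:
        """On-demand lookup of everything recorded for one transaction."""
        return [e for e in self.audit_log if e.transaction_id == transaction_id]


if __name__ == "__main__":
    lake, alerts = [], []

    def unreachable_partner(body: bytes) -> None:
        raise ConnectionError("partner endpoint unreachable")

    hub = IntegrationHub(alert=alerts.append)
    hub.move("tx-1", "erp", "data-lake", {"order_id": "A1"}, lake.append)
    hub.move("tx-2", "erp", "partner-x", {"order_id": "A1"}, unreachable_partner)
    print(len(lake), [a.transaction_id for a in alerts])  # 1 ['tx-2']
```

Storing a checksum with each audit entry is one simple way to show later exactly what was sent; in practice the in-memory log would be persisted to durable storage.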

Yes, today’s big data is synonymous with analytics, but don’t forget the infrastructure. When it comes down to it, big data success depends on an efficient, seamless data transformation policy: easily moving data in, out of, and through systems, feeding data lakes, and provisioning analytics apps, which in turn convert raw information into valuable business intelligence. And once companies recognize which system limitations are stalling their big data implementation projects, they can begin to properly integrate businesses, people, applications, the cloud, and data repositories.

About Cleo