Blog: From Big to BIG: The Shifting Big Data Landscape

How the so-called data explosion means rethinking data infrastructure

“Knowledge is power. Information is liberating.” — Kofi Annan

Central to the premise of big data is the idea that data, in its rapidly growing variety of forms, can be transformed into information that is coherent, usable, and useful to companies. Information gives a business the knowledge it needs so that people can decide how and when to act. Big data insights come from data mining, predictive analysis, and pattern-of-life analytics, to name a few techniques. They liberate the enterprise, helping to forge new pathways to profitability while delivering ever-increasing value to customers.

In theory, this sounds great! It also explains why an estimated 73% of enterprises have either invested in big data already or plan to do so soon. At the same time, the theoretical promise of any big data project glosses over the ongoing challenges companies face in making their growing heap of data viable.

There is new meaning in the word BIG as it applies to big data today

Historically, data has always been big, even when information was measured in easily quantifiable bits and bytes. This is because the word big was always relative to the power of the architecture handling the flow of electronic data. As machines grew in capacity at roughly the rate Moore’s law projected, the volume of digital data grew at a comparable pace. In other words, there was an acceptable parity between the volume of data and the power systems needed to handle data migration, storage, and processing. The onset of the so-called data explosion, on the other hand, has shifted the sense of the word: BIG.

Big data is really an aggregate of large and small information assets

Business data is no longer limited to transactional and operational information. Sources of big data include millions of hourly e-commerce purchases, hundreds of millions of connected mobile devices with users on the go, countless posts and interactions on social media platforms, and billions of daily search engine queries. Furthermore, the proliferation of IoT connectivity is adding geographically dispersed endpoints at an unparalleled rate, so the volume of data being created keeps accelerating. In fact, the estimated number of bits of digital data in existence will surpass the number of stars in the universe by 2020. To put that on a more human scale, that’s 100 billion times more than the number of grains of sand on the Earth. (Well, so much for a human scale analogy.) As data growth outpaces system capacity and performance, many enterprises find the data explosion hard to embrace, held back by infrastructure that is aging, fragmented, or dependent on continual manual scripting.

The only challenge a company will not face in any big data initiative is data generation

Doing anything with big data generally means big strategic questions. These include:

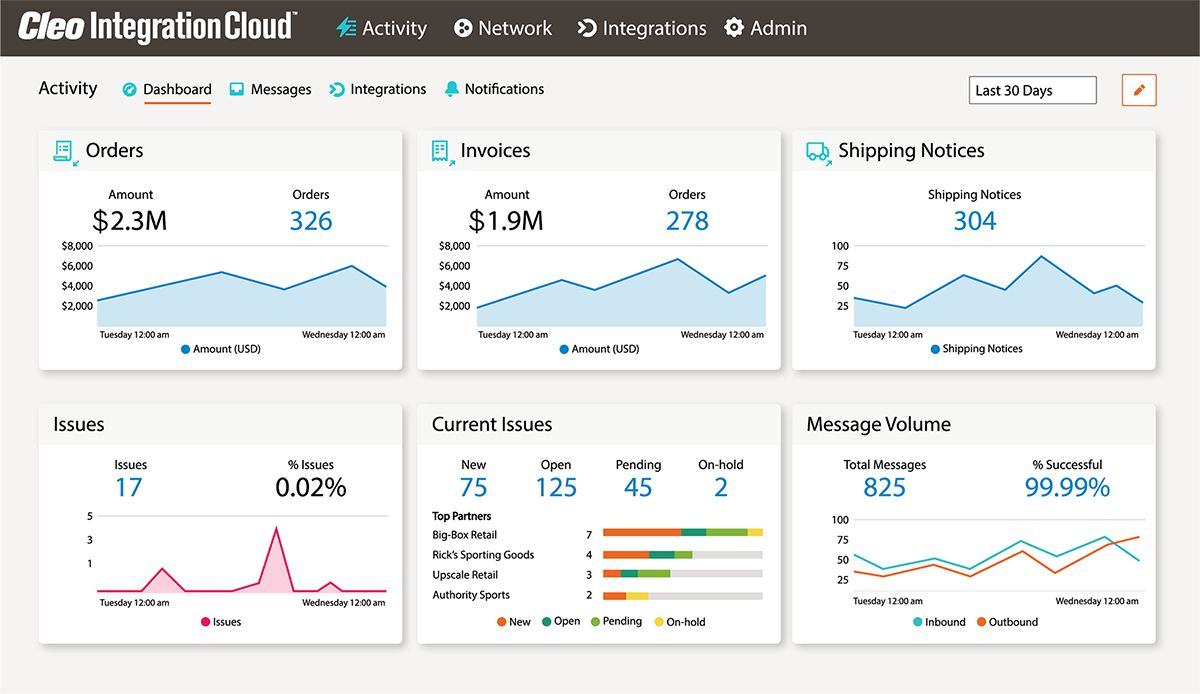

- Ingestion: How will I get raw data to flow from millions of endpoints into storage and staging systems?

- Processing: How will my clustered and segmented data move from data warehouses and data lakes into analytical programs for diagnostic, predictive, and prescriptive processing?

- Management and access: How will our big data administrators manage the intake of information assets, their movement to processing systems, and access to the analytical output?

Effectively leveraging big data requires reliable data movement and data integration along the entire data pipeline. Any company that fails to invest in secure and scalable big data infrastructure, one that ensures efficient and rapid ingestion and migration of data to, from, and within systems, will have a harder time realizing a viable ROI from a big data investment.
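To make the three questions above a little more concrete, here is a minimal Python sketch of one pipeline with an ingestion step, a processing step, and an access check. It is an illustration under stated assumptions, not a description of any particular product: the `Event` fields, the function names, and the role names are all hypothetical, and a real deployment would use dedicated ingestion, storage, and access-control systems instead of in-memory lists.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One staged record. All field names are hypothetical."""
    endpoint_id: str  # device or source that produced the record
    kind: str         # e.g. "purchase" or "search"
    value: float


def ingest(raw_records):
    """Ingestion: validate raw records and stage the well-formed ones.

    Malformed records are set aside rather than silently dropped,
    so that data movement stays auditable.
    """
    staged, rejected = [], []
    for rec in raw_records:
        try:
            staged.append(
                Event(str(rec["endpoint_id"]), str(rec["kind"]), float(rec["value"]))
            )
        except (KeyError, TypeError, ValueError):
            rejected.append(rec)
    return staged, rejected


def process(staged):
    """Processing: a stand-in for analytics, totalling values per kind."""
    totals = defaultdict(float)
    for event in staged:
        totals[event.kind] += event.value
    return dict(totals)


def read_report(totals, role, allowed_roles=("analyst", "admin")):
    """Management and access: only allowed roles may read the output."""
    if role not in allowed_roles:
        raise PermissionError(f"role {role!r} may not read analytical output")
    return totals
```

A caller would chain the steps, for example `staged, rejected = ingest(records)` followed by `read_report(process(staged), "analyst")`. Keeping the rejected records separate is one simple way to notice when data is being lost on the way in.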

Working big data miracles requires more than data alone

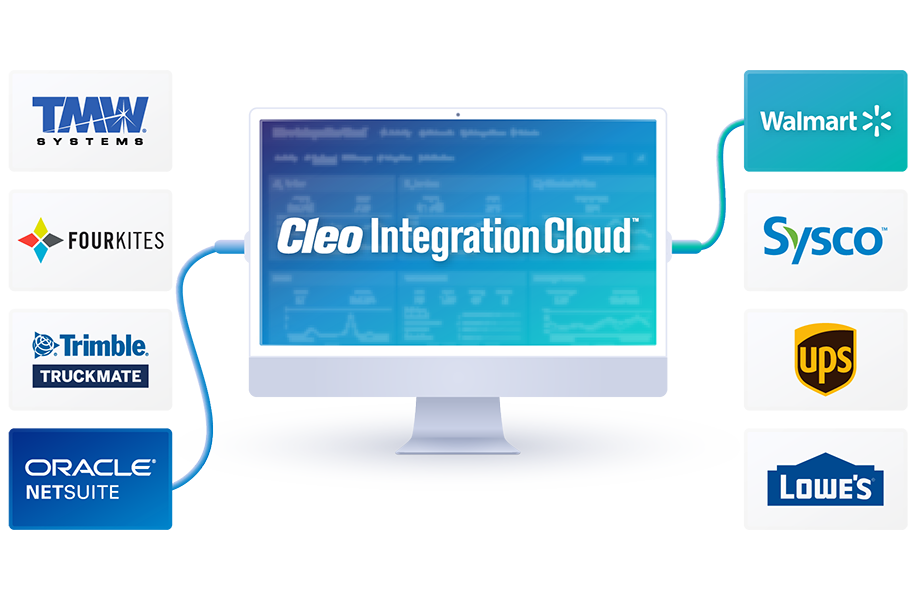

Smart integration implies consolidation: migrating disparate legacy communications systems and business processes onto a quickly deployable single-platform solution. When you integrate your data from individual endpoints through to analytical output using a scalable and secure gateway solution, the data flows quickly and smoothly. Business leaders can then realize value more effectively, using pattern generation and predictive analytics to make better-informed decisions. Securely moving big data also means being able to respond rapidly to fluctuations in demand and circumstances by adjusting operational activity to match. Functional insight goes further, helping to lower overhead, cut costs, and streamline production practices from conception through to the customer.

To keep your analytics initiative moving in the right direction, big data gateway solutions ensure the seamless flow of structured, multi-structured, and unstructured big data across all systems, enabling strategic business partnerships, delivering increased value to your customers, and optimizing operational processes across the enterprise.
